In my last article, I said that AI chatbots are getting hotter and hotter. Since then, I have been wondering how I can create my own AI to build chatbots, Q&A systems and my own agents. It is relatively easy to use API services such as the ChatGPT API. But I would like to create my own AI from scratch with open source models! This is especially useful when we want to analyze confidential data, as we do not need to send it to a public service. It must be exciting. Let us start!

1. Let us choose base models to create our own AI

There are many open source language models. It is very important to choose the right one, as we need to keep a balance between the performance of the model and its size. Last week, I found a brand new model called “Flan-UL2 20B” from Google Brain. The work is led by Mr. Yi Tay, who is Senior Research Scientist at Google Brain, Singapore. The model is fully open, as everyone can download the model and its weights. I am very glad, because many LLMs come with usage restrictions such as a non-commercial license. If you are interested in the technical details, I strongly recommend reading his blog “A New Open Source Flan 20B with UL2” (1). This is a “must read” for everyone who is interested in LLMs.
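To make the size side of this trade-off concrete, here is a rough back-of-the-envelope sketch. It only estimates the memory needed to hold the weights of a 20B-parameter model at a few common numeric precisions. The helper name `weight_memory_gb` and the precision table are my own illustration, and real usage will be higher because activations and other runtime overhead are not counted.

```python
# Rough estimate of the memory needed just to store model weights.
# Assumption: weights dominate; activations and framework overhead are ignored.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # 20B parameters, as in the model name.
    for dtype in BYTES_PER_PARAM:
        size = weight_memory_gb(20e9, dtype)
        print(f"20B parameters in {dtype}: about {size:.0f} GB")
```

Under these assumptions, a 20B model needs roughly twice as many gigabytes as it has billions of parameters when stored in 16-bit precision, which is why model size matters so much when choosing a base model to run ourselves.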

2. Perform small experiments and see how it works!

I would like to use the abstract of the famous research paper “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models” (2) as the test document. It says:

“We explore how generating a chain of thought -- a series of intermediate reasoning steps -- significantly improves the ability of large language models to perform complex reasoning. In particular, we show how such reasoning abilities emerge naturally in sufficiently large language models via a simple method called chain of thought prompting, where a few chain of thought demonstrations are provided as exemplars in prompting. Experiments on three large language models show that chain of thought prompting improves performance on a range of arithmetic, commonsense, and symbolic reasoning tasks. The empirical gains can be striking. For instance, prompting a 540B-parameter language model with just eight chain of thought exemplars achieves state of the art accuracy on the GSM8K benchmark of math word problems, surpassing even finetuned GPT-3 with a verifier.”

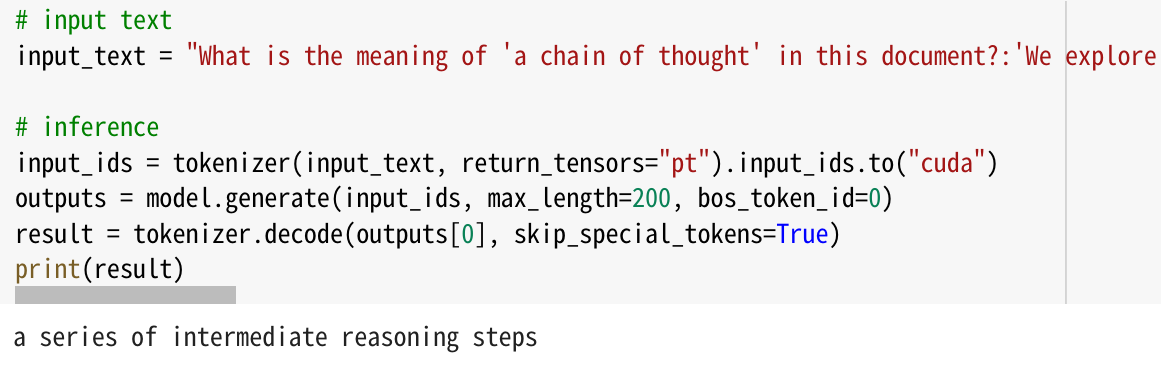

It might be a little difficult to read as there are many technical terms in it, haha. So I would like to ask the model two questions about this abstract. Here is the first one, together with the answer I get from the model.

Q : What is the meaning of 'a chain of thought' in this document?

A : a series of intermediate reasoning steps

I share my notebook to show how it works during the experiment.

The second one is

Q : What is the meaning of 'chain of thought prompting' in this document?

A : chain of thought prompting is a method for generating a chain of thought

These two questions are only slightly different, but the model answers both of them accurately without confusion. This is incredible! Is the model really free and open source?! I am convinced this model is one of the best choices for creating our own AI with our own hands.
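The experiment above can be sketched roughly as follows. This is not the exact notebook code: the prompt layout in `build_prompt`, the helper names, the generation settings and the checkpoint id `google/flan-ul2` are my assumptions for illustration. The model loader uses the Hugging Face `transformers` library (with `accelerate` installed for `device_map="auto"`) and needs enough GPU memory for a 20B model, so it is kept separate from the prompt logic.

```python
from typing import Callable


def build_prompt(context: str, question: str) -> str:
    """Combine a passage and a question into one prompt (layout is an assumption)."""
    return f"{context.strip()}\n\nQ: {question.strip()}\nA:"


def ask(context: str, question: str, generate: Callable[[str], str]) -> str:
    """Send the prompt to any text generator and return the cleaned answer."""
    return generate(build_prompt(context, question)).strip()


def make_flan_ul2_generator(
    model_id: str = "google/flan-ul2",  # assumed Hugging Face checkpoint id
    max_new_tokens: int = 64,           # assumed generation length
) -> Callable[[str], str]:
    """Load the model once and return a function that maps a prompt to text."""
    # Imported lazily so the prompt helpers work without these packages.
    import torch
    from transformers import AutoTokenizer, T5ForConditionalGeneration

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = T5ForConditionalGeneration.from_pretrained(
        model_id, torch_dtype=torch.bfloat16, device_map="auto"
    )

    def generate(prompt: str) -> str:
        inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
        return tokenizer.decode(output_ids[0], skip_special_tokens=True)

    return generate


if __name__ == "__main__":
    abstract = "<paste the abstract quoted above here>"
    generate = make_flan_ul2_generator()  # downloads and loads the full model
    for question in (
        "What is the meaning of 'a chain of thought' in this document?",
        "What is the meaning of 'chain of thought prompting' in this document?",
    ):
        print("Q :", question)
        print("A :", ask(abstract, question, generate))
```

Because `ask` accepts any function that turns a prompt into text, the same two questions can be sent to a different open source model simply by swapping the generator.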

As we have seen, we have found a great model for creating our own AI. Next, I would like to consider how to deploy the model so that it is easy to use. I will explain it in my next article, stay tuned!

(1) “A New Open Source Flan 20B with UL2”, Yi Tay, Senior Research Scientist at Google Brain, Singapore, 3 March 2023

(2) “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models”, Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Brian Ichter, Fei Xia, Ed Chi, Quoc Le, Denny Zhou, Google Research, Brain Team, 10 January 2023

Copyright © 2023 Toshifumi Kuga. All rights reserved.

Notice: ToshiStats Co., Ltd. and I do not accept any responsibility or liability for loss or damage occasioned to any person or property through using materials, instructions, methods, algorithms or ideas contained herein, or acting or refraining from acting as a result of such use. ToshiStats Co., Ltd. and I expressly disclaim all implied warranties, including merchantability or fitness for any particular purpose. There will be no duty on ToshiStats Co., Ltd. and me to correct any errors or defects in the codes and the software.