Happy New Year!

As we finally step into 2026, I am sure many of you are keenly interested in how AI agents will develop this year. Therefore, I would like to make some bold predictions by raising three key points, while also considering their connection to machine learning. Let's get started.

1. A Dramatic Leap in Multimodal Performance

The precision of the image generation AI "Nano Banana Pro" (1), released by Google on November 20, 2025, likely stunned not just AI researchers but the general public as well. It grasps the meaning of a prompt thoroughly and reproduces it faithfully in an image, a capability that could be described as "Text-to-Infographics."

Furthermore, its multilingual capabilities have improved significantly, allowing it to render Japanese neon signs accurately, like this one: "明けましておめでとう 2026" (Happy New Year 2026).

This model is not a simple image generation AI; it is built on top of the Gemini 3 Pro frontier model, with image generation capabilities added. That is why it can deeply understand the user's prompt and generate images that align with the user's intent. Google also has AI models such as Genie 3 (2) that perform simulations using video, and it leads the industry in multimodal models. We certainly cannot take our eyes off Google's moves in 2026.

2. The Explosive Popularity of "Agentic Coding"

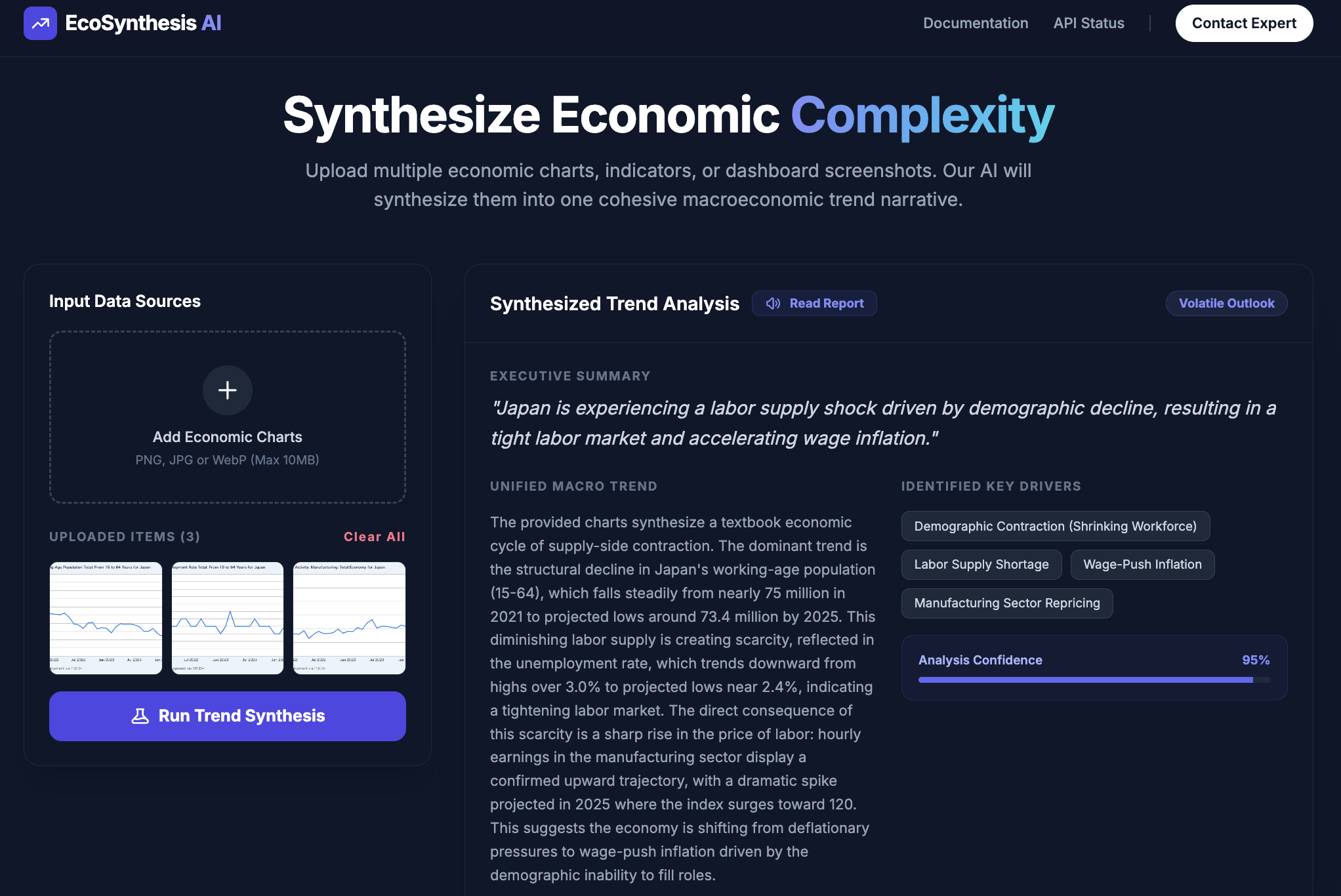

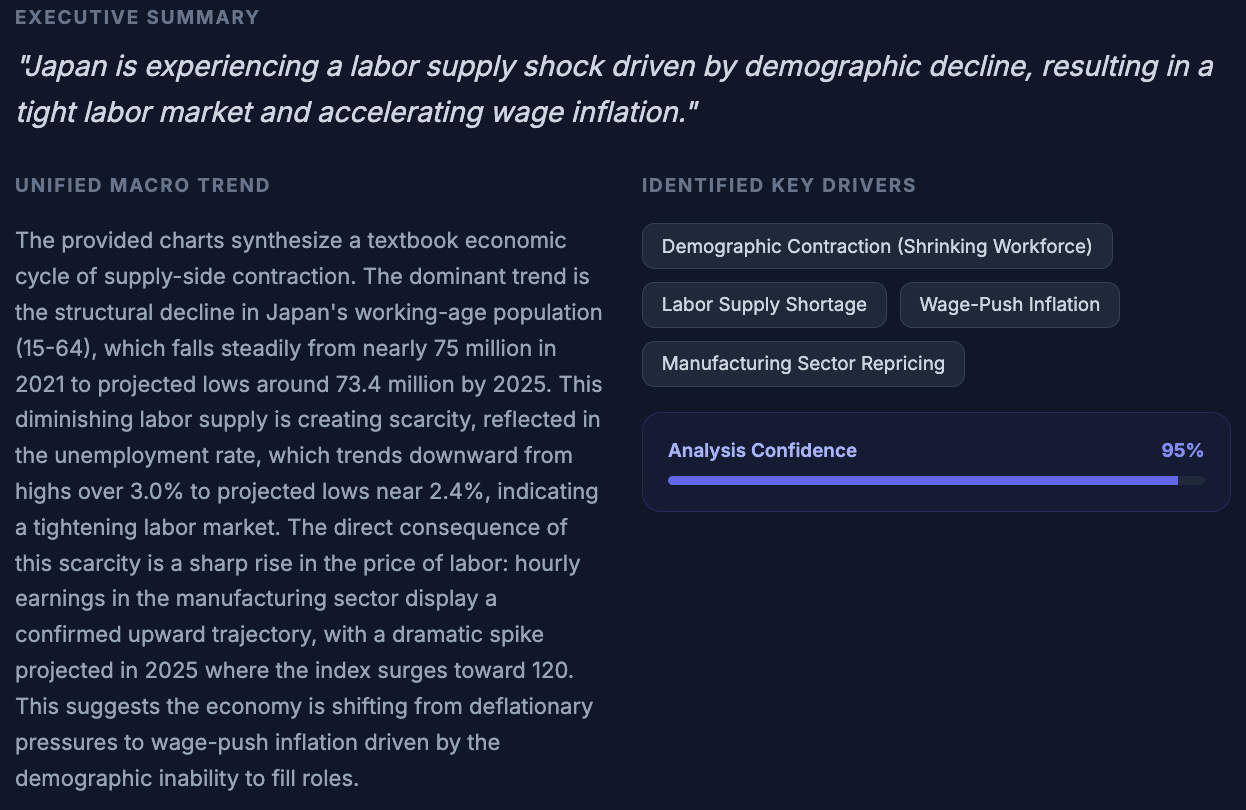

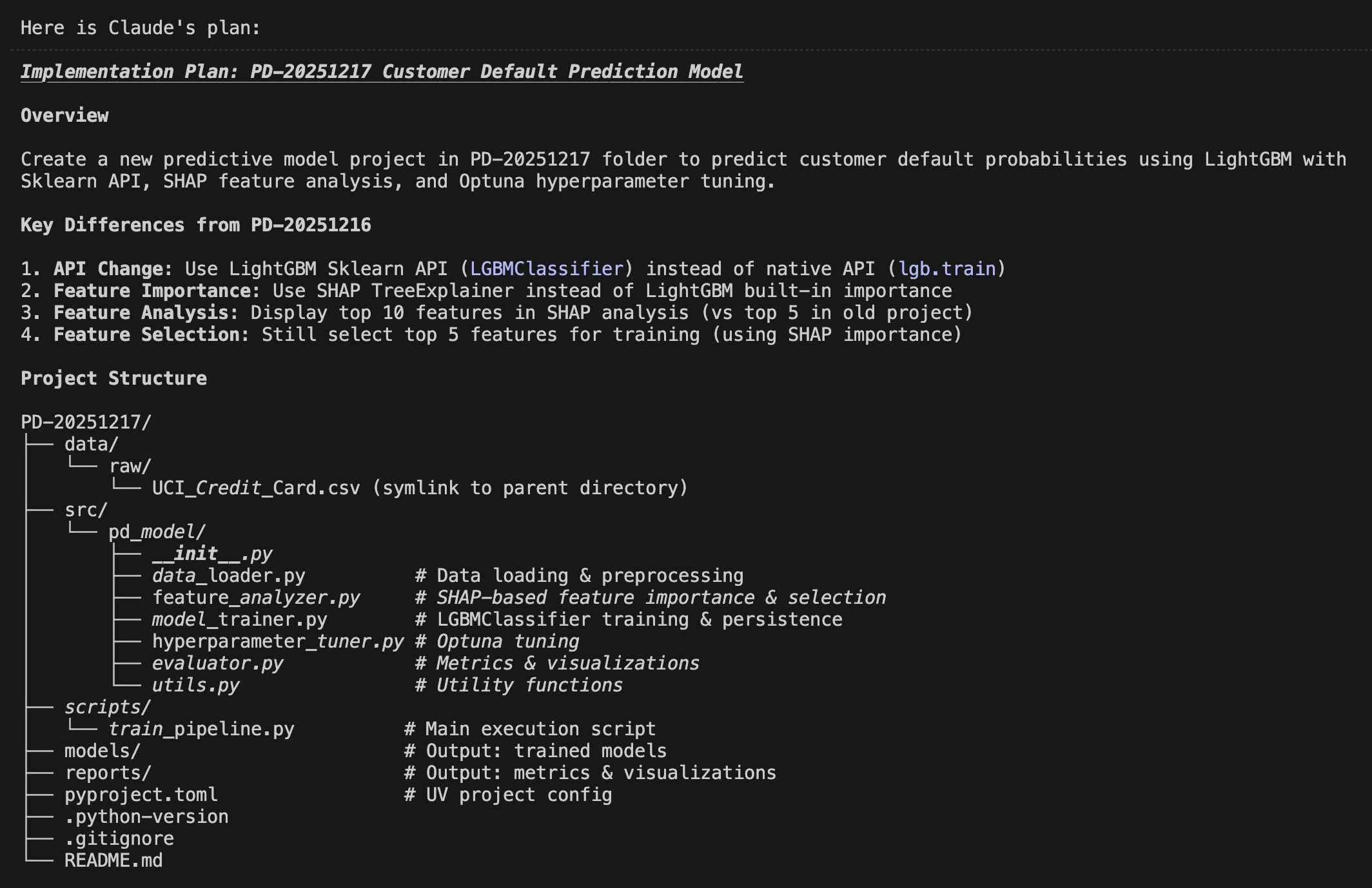

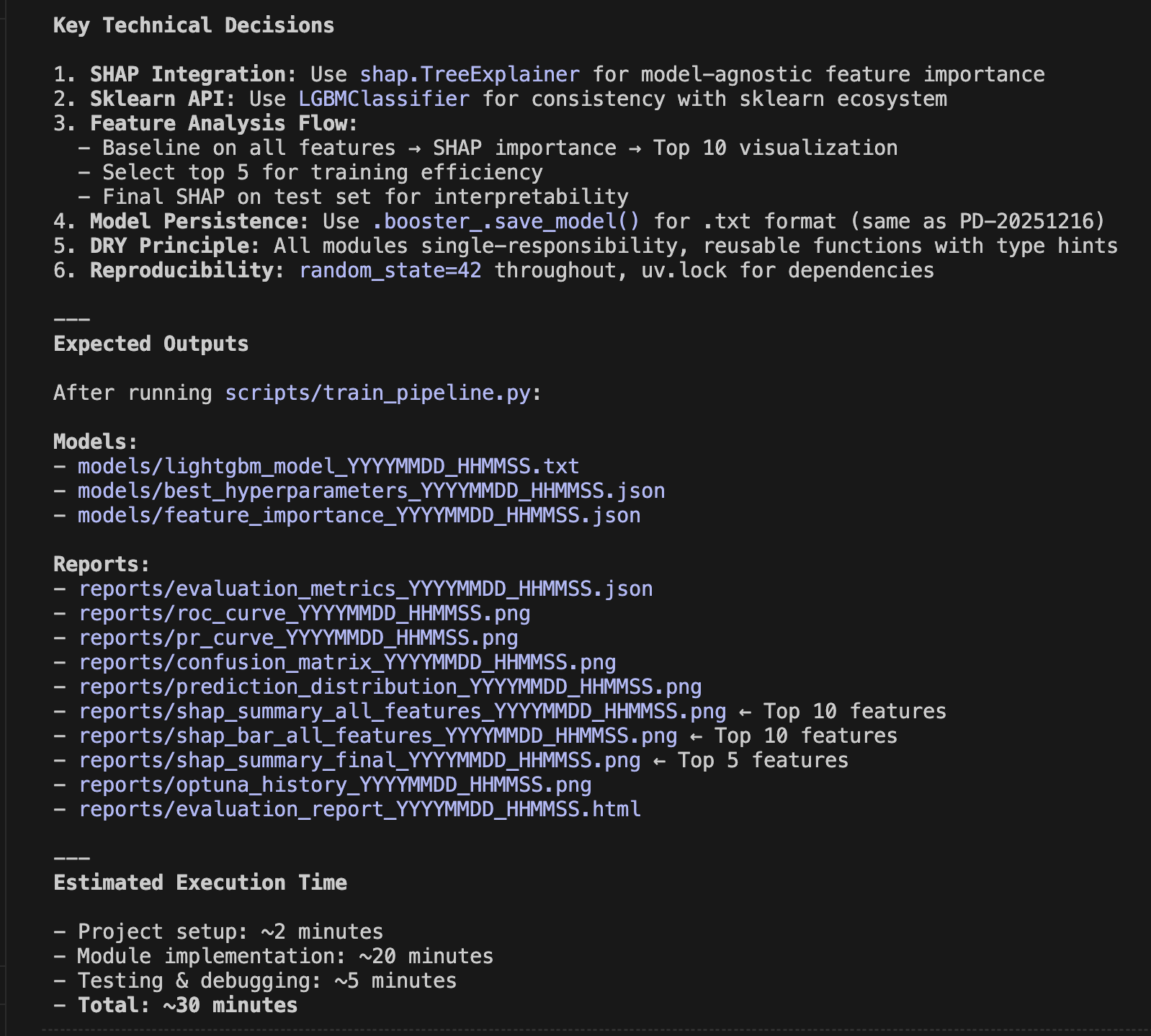

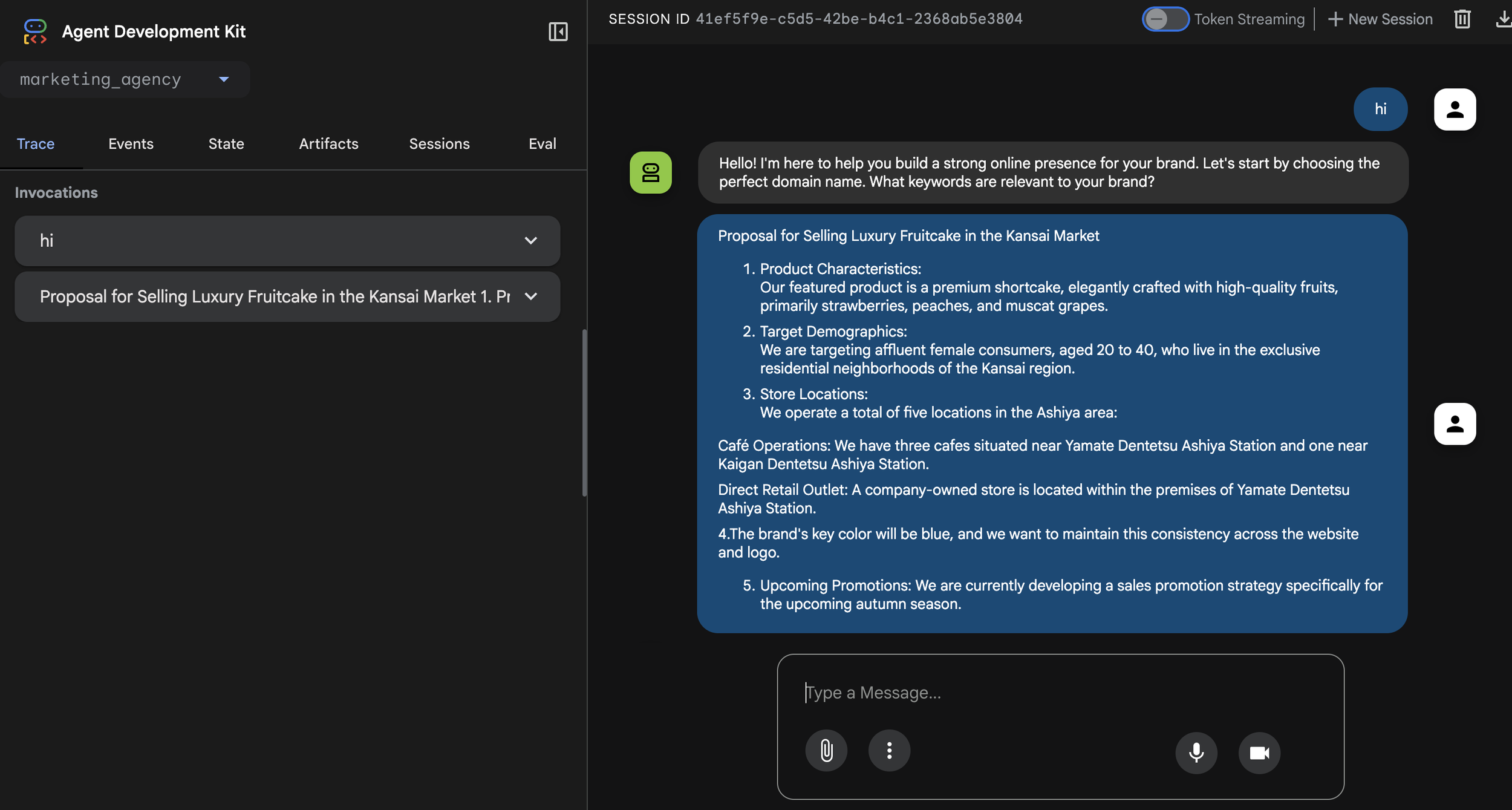

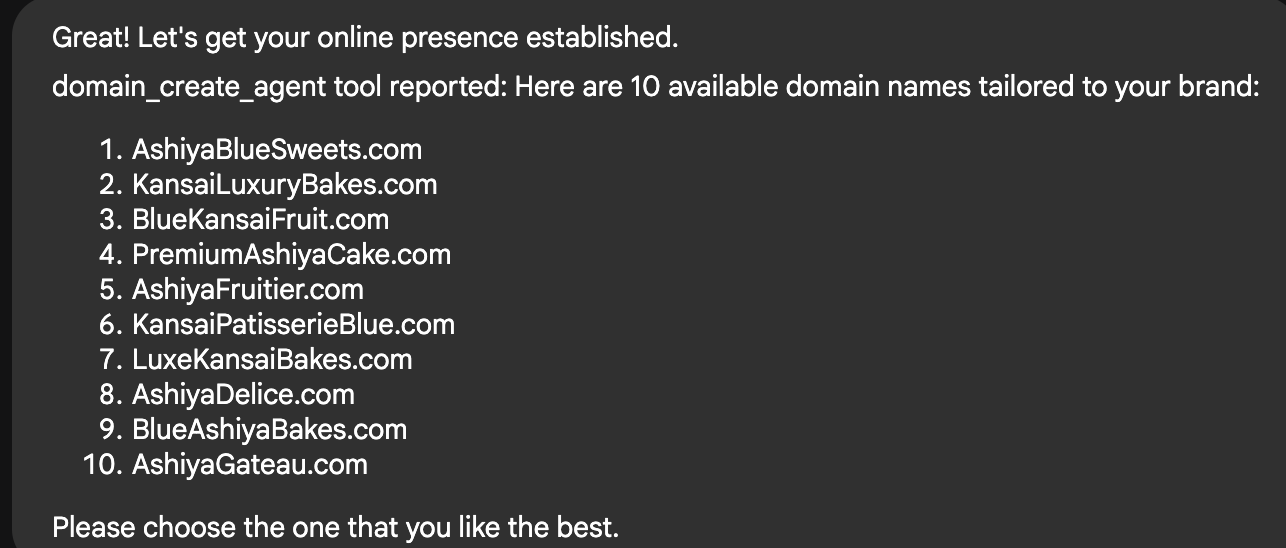

Coding by AI agents, or "Agentic Coding," has become a massive global movement. However, agents are not yet fully reliable on complex code, and human review is still necessary. Additionally, humans still need to write the Product Requirements Document (PRD), which serves as the blueprint for implementation.

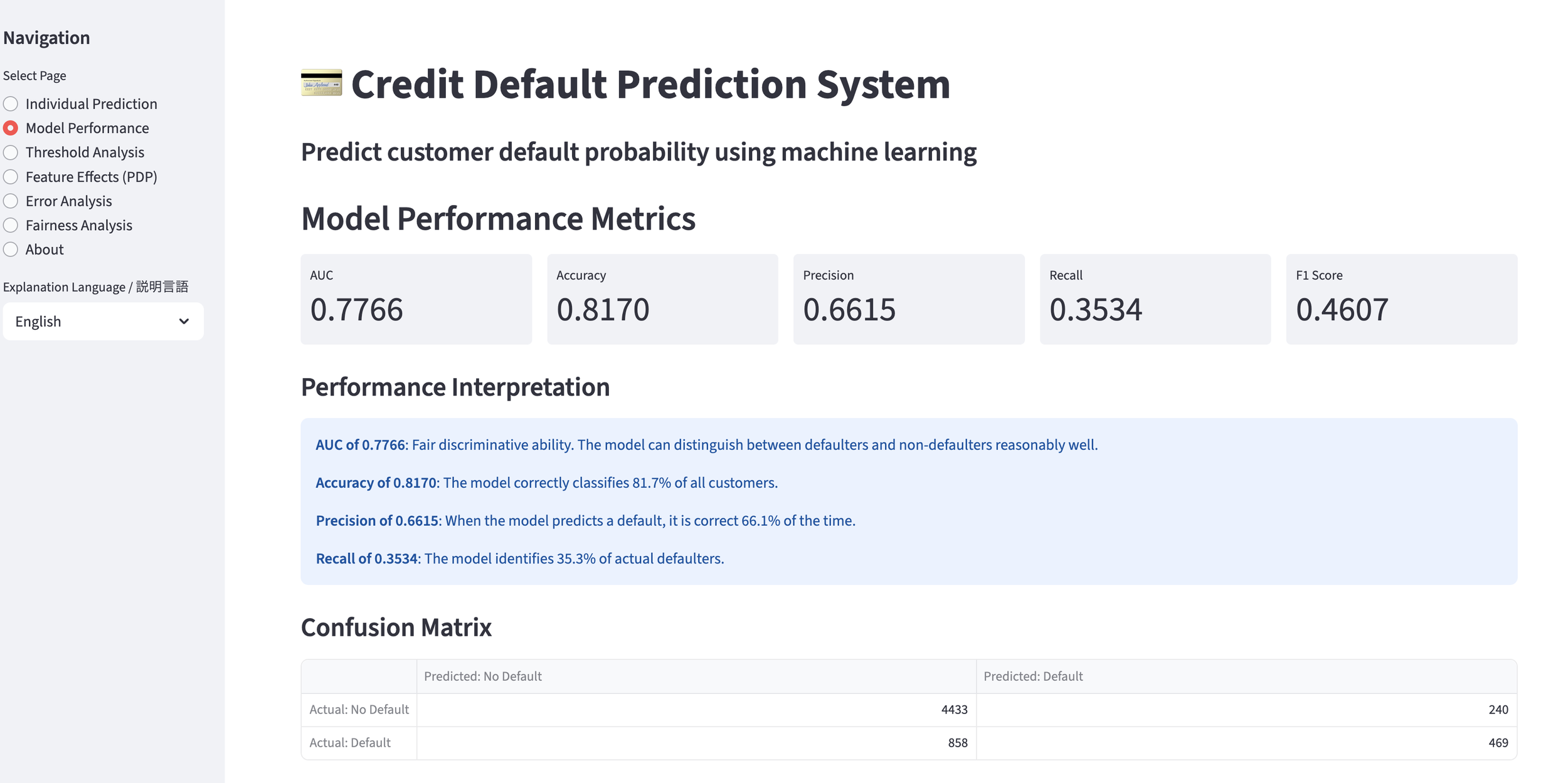

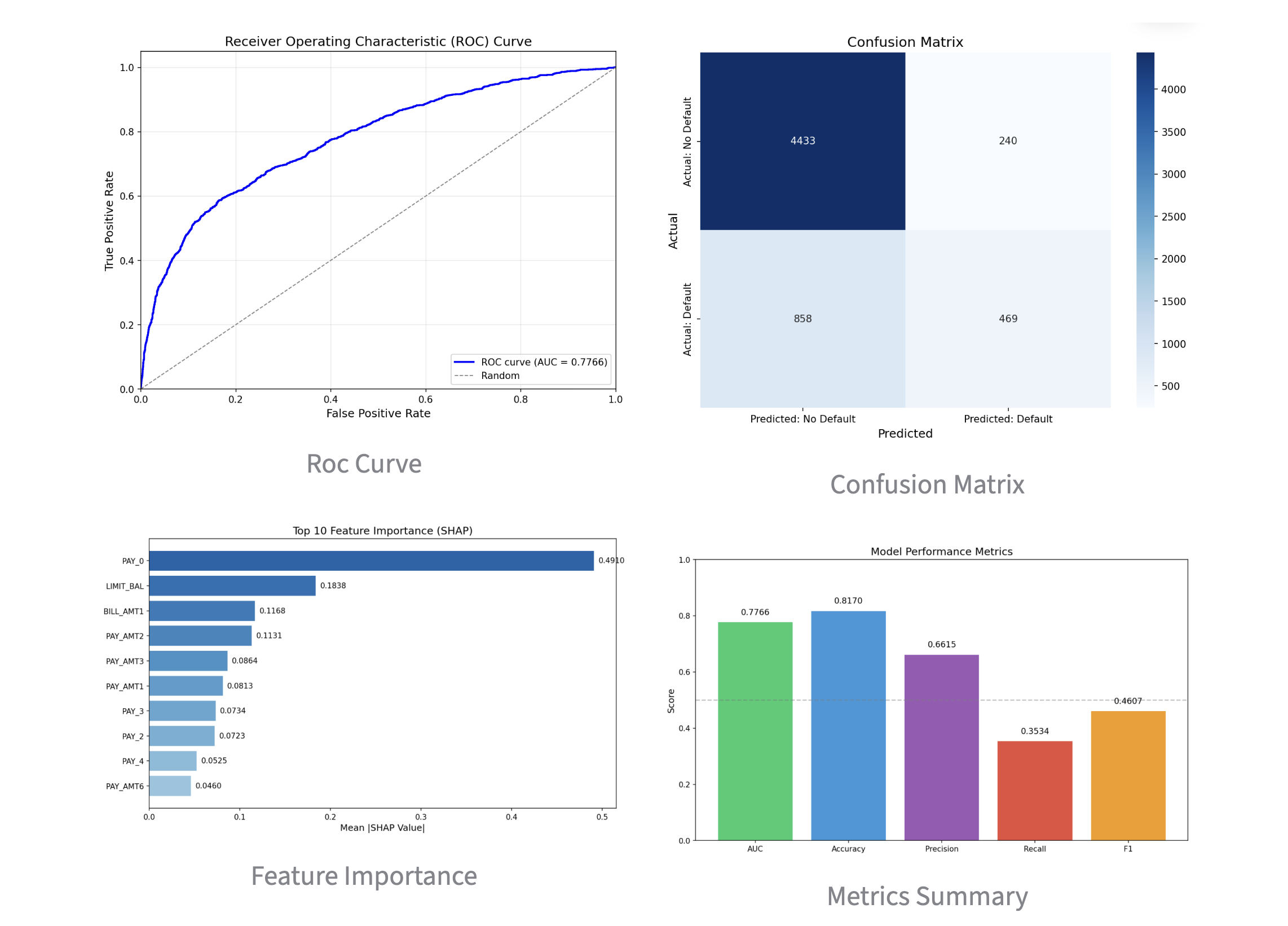

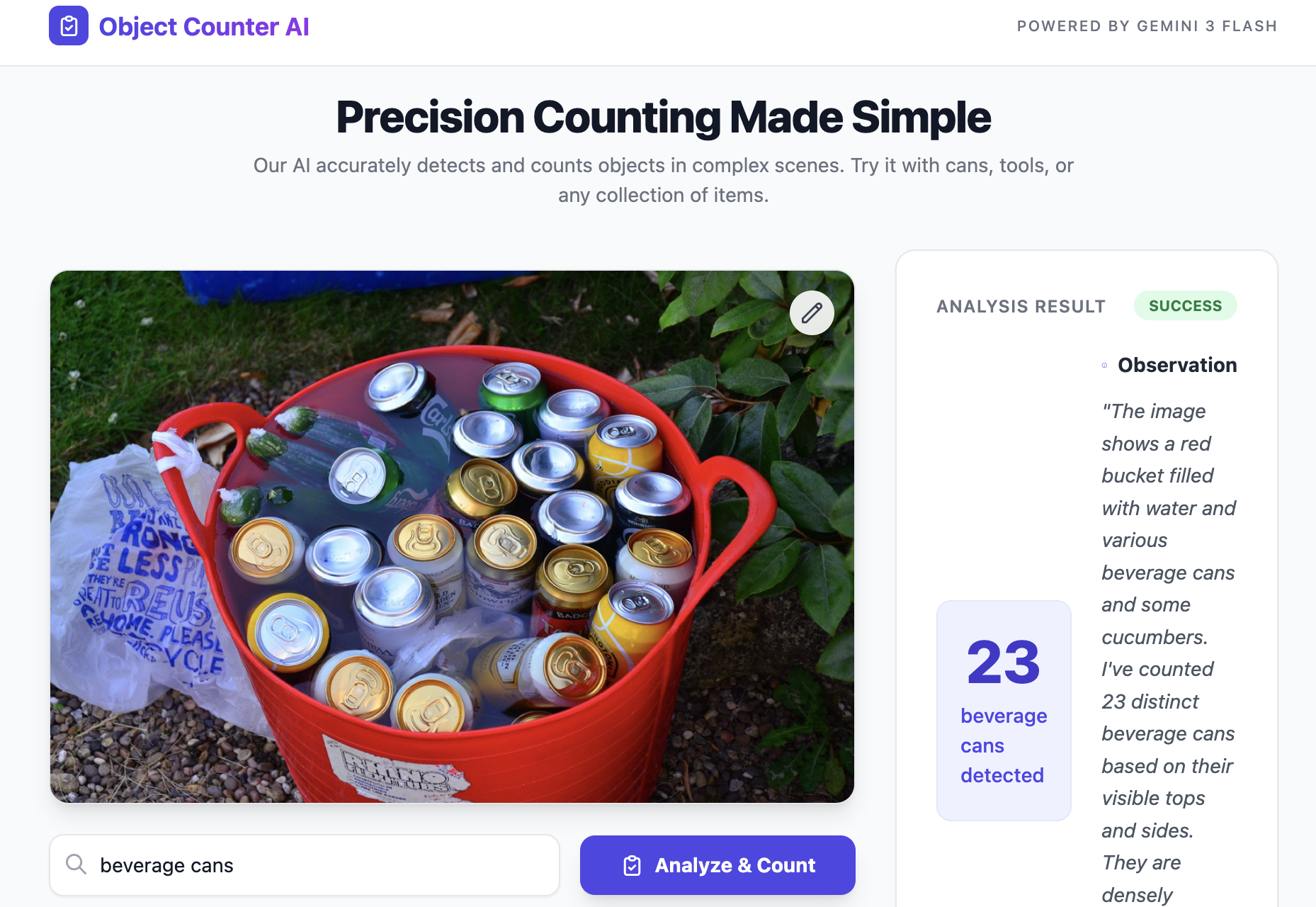

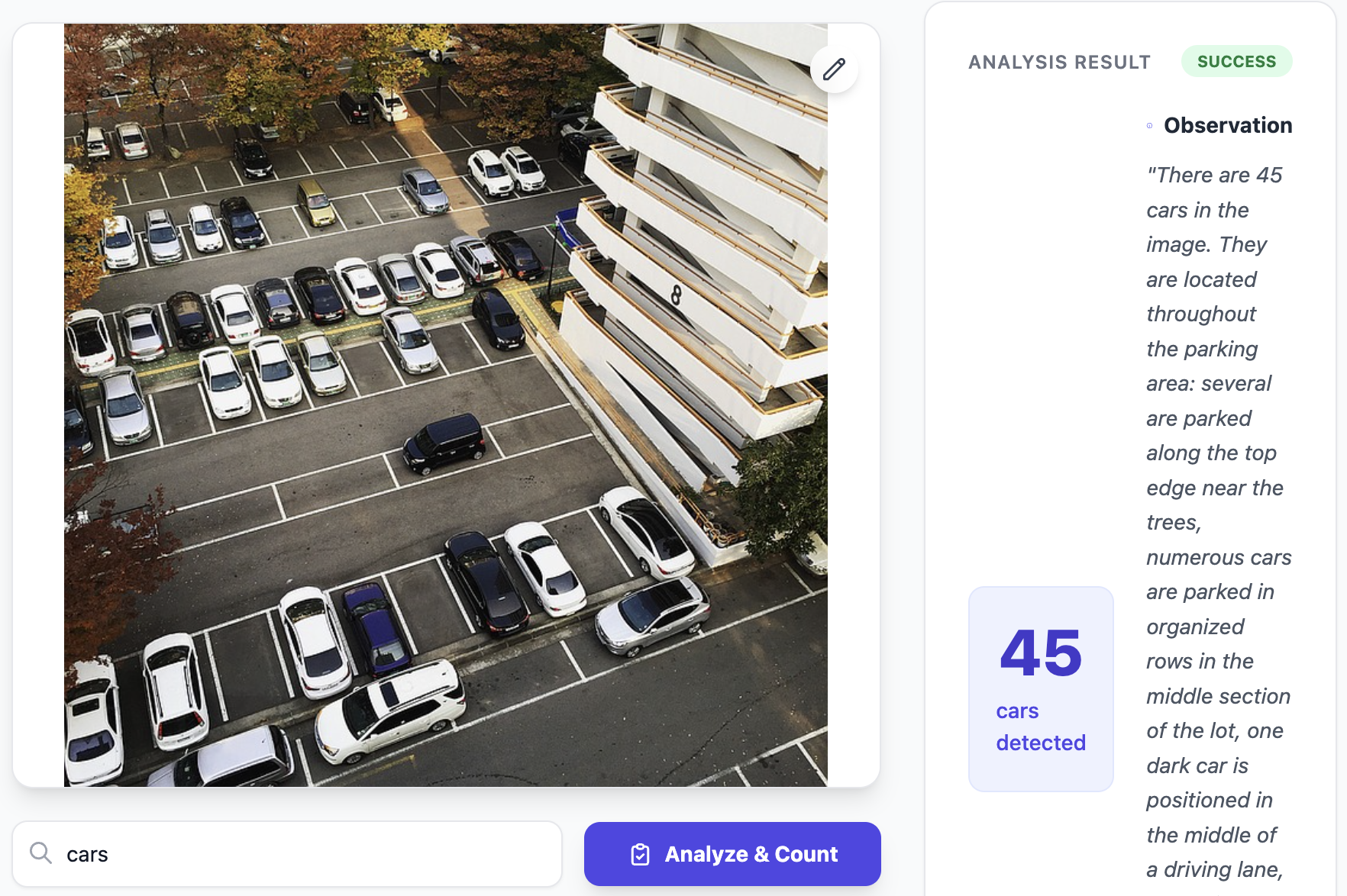

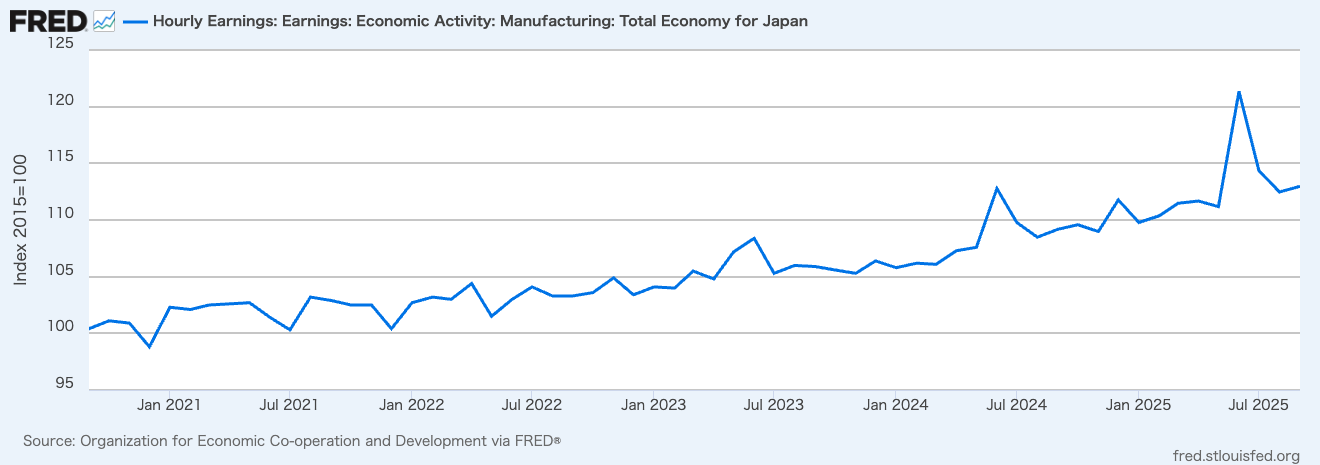

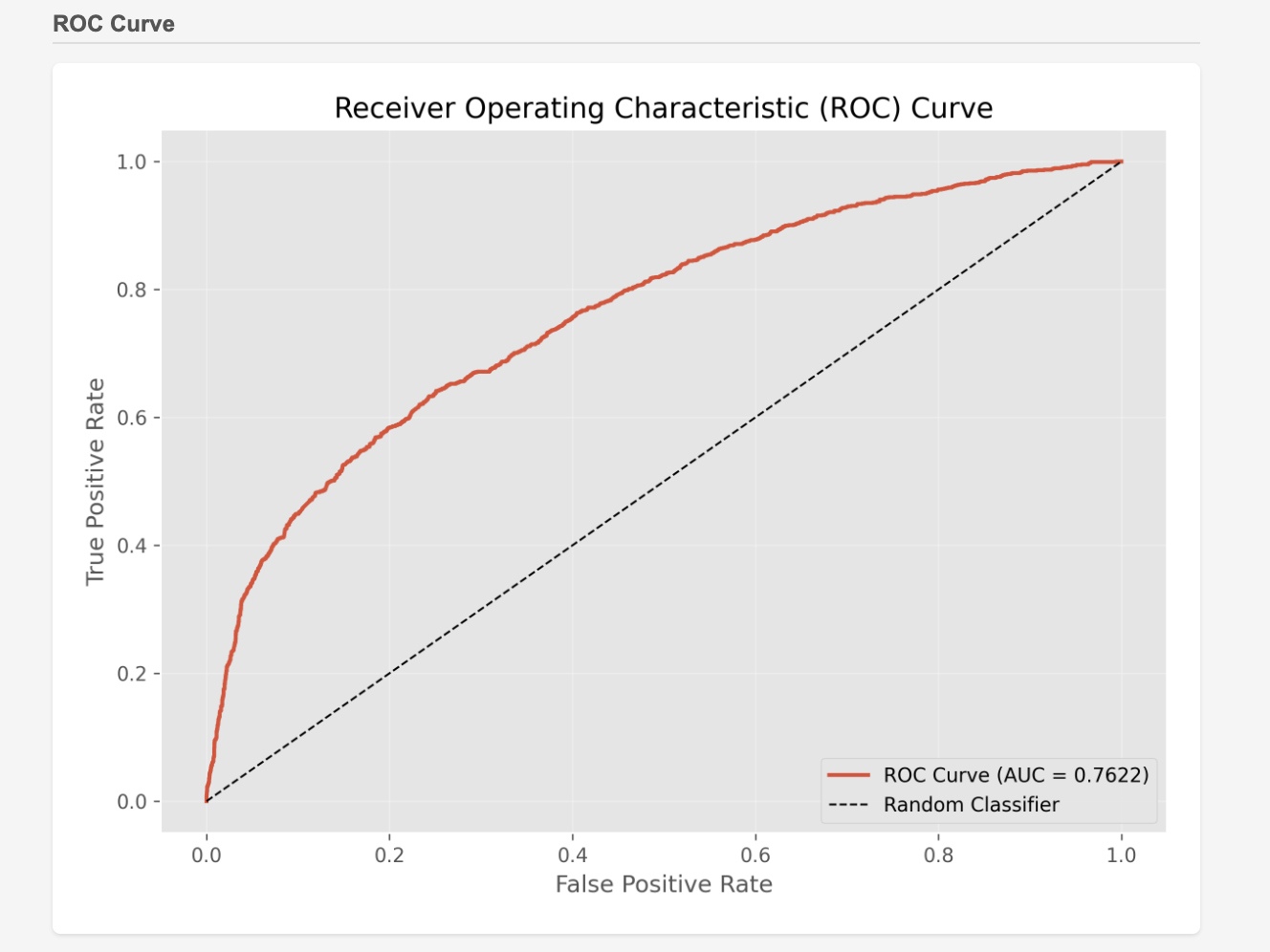

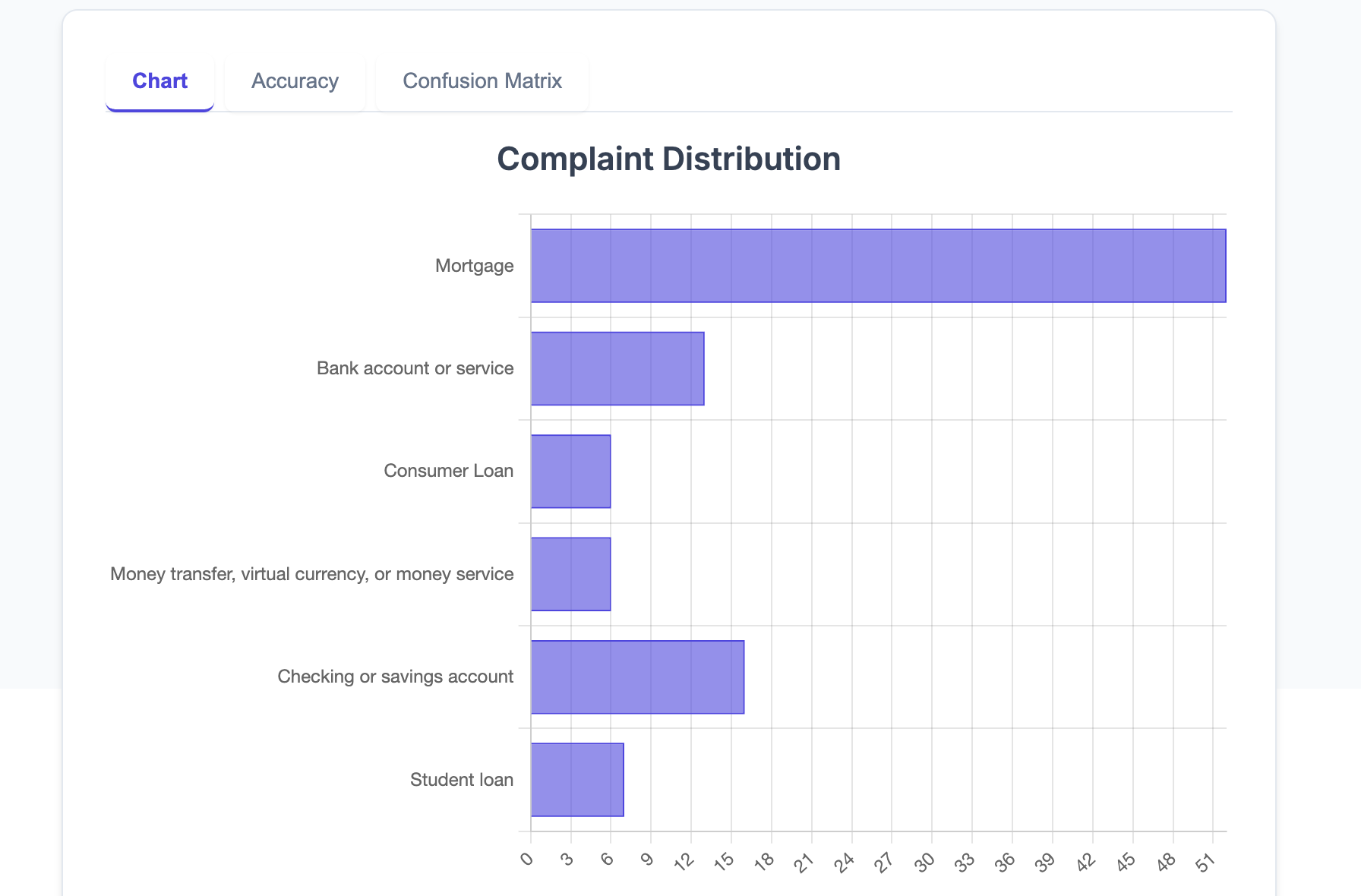

I have built several default prediction models used in the financial industry, and I have consistently found that development is more efficient when the human side first writes a precise PRD. By doing so, we can largely entrust the actual coding to the AI agent. This is an example of a default prediction model.
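For readers who have not seen one, the idea of a default prediction model can be sketched in a few lines. This is only a minimal illustration under stated assumptions, not one of the models mentioned above: the two features (`debt_ratio`, `late_payments`), the synthetic data, and the function names are all hypothetical, and the model is a plain logistic regression fitted by gradient descent using only the Python standard library.

```python
import math
import random


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_synthetic_borrowers(n, seed=0):
    """Generate (features, defaulted) pairs from a made-up relationship."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        debt_ratio = rng.random()          # hypothetical feature in [0, 1)
        late_payments = rng.randint(0, 5)  # hypothetical count, 0 to 5
        # Assumed ground truth: more debt and more late payments raise risk.
        p_default = sigmoid(-3.0 + 3.0 * debt_ratio + 0.6 * late_payments)
        defaulted = 1 if rng.random() < p_default else 0
        # Scale the count to [0, 1] so both features share a similar range.
        rows.append(([debt_ratio, late_payments / 5.0], defaulted))
    return rows


def predict_default_probability(weights, bias, x):
    """Estimated probability of default for one feature vector."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def fit_logistic(rows, learning_rate=0.5, epochs=300):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n_features = len(rows[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in rows:
            error = predict_default_probability(weights, bias, x) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - learning_rate * g / len(rows)
                   for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(rows)
    return weights, bias


if __name__ == "__main__":
    weights, bias = fit_logistic(make_synthetic_borrowers(500))
    low_risk = predict_default_probability(weights, bias, [0.1, 0.0])
    high_risk = predict_default_probability(weights, bias, [0.9, 1.0])
    print(f"low-risk borrower:  {low_risk:.2f}")
    print(f"high-risk borrower: {high_risk:.2f}")
```

In a real project, the choice of features, the target definition, and the evaluation criteria are exactly the kind of detail a PRD would pin down before any code is handed to an agent.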

However, the speed of evolution for frontier models is tremendous. In the latter half of 2026, we expect updates like Gemini 4, GPT-6, and Claude 5, and frankly, it is difficult to even imagine what capabilities AI agents will acquire as a result.

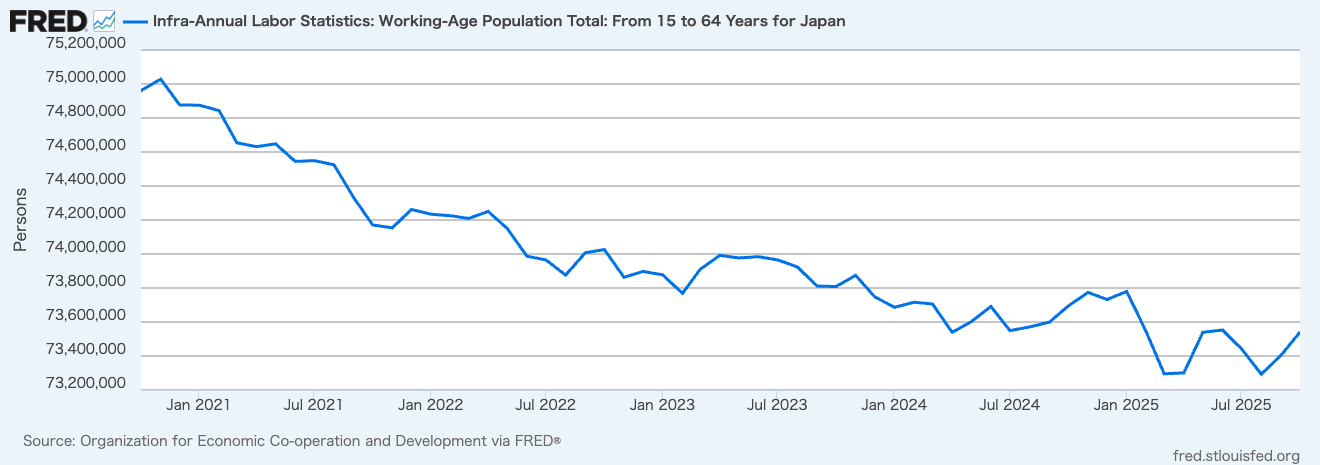

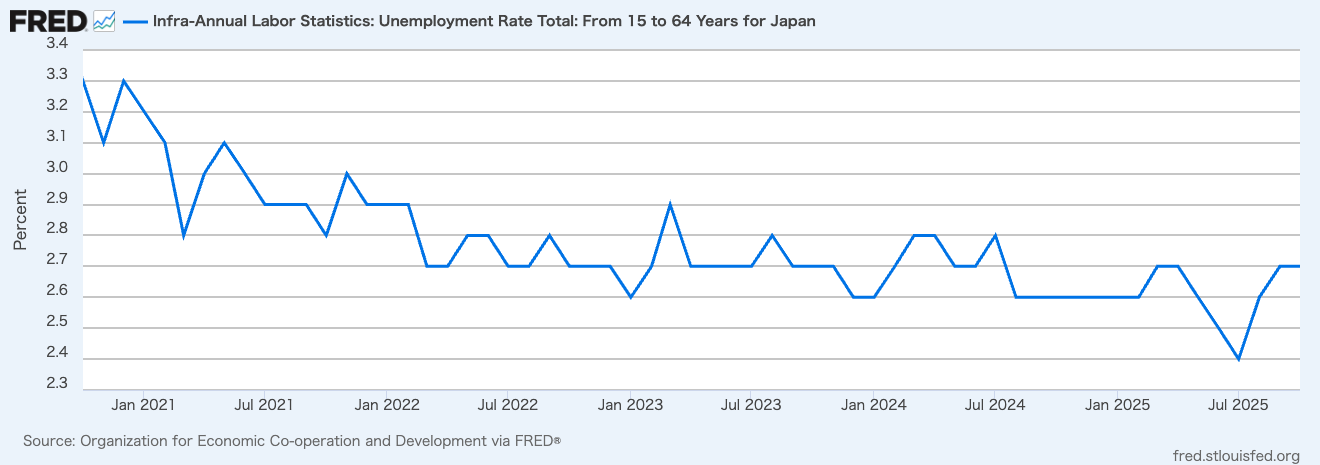

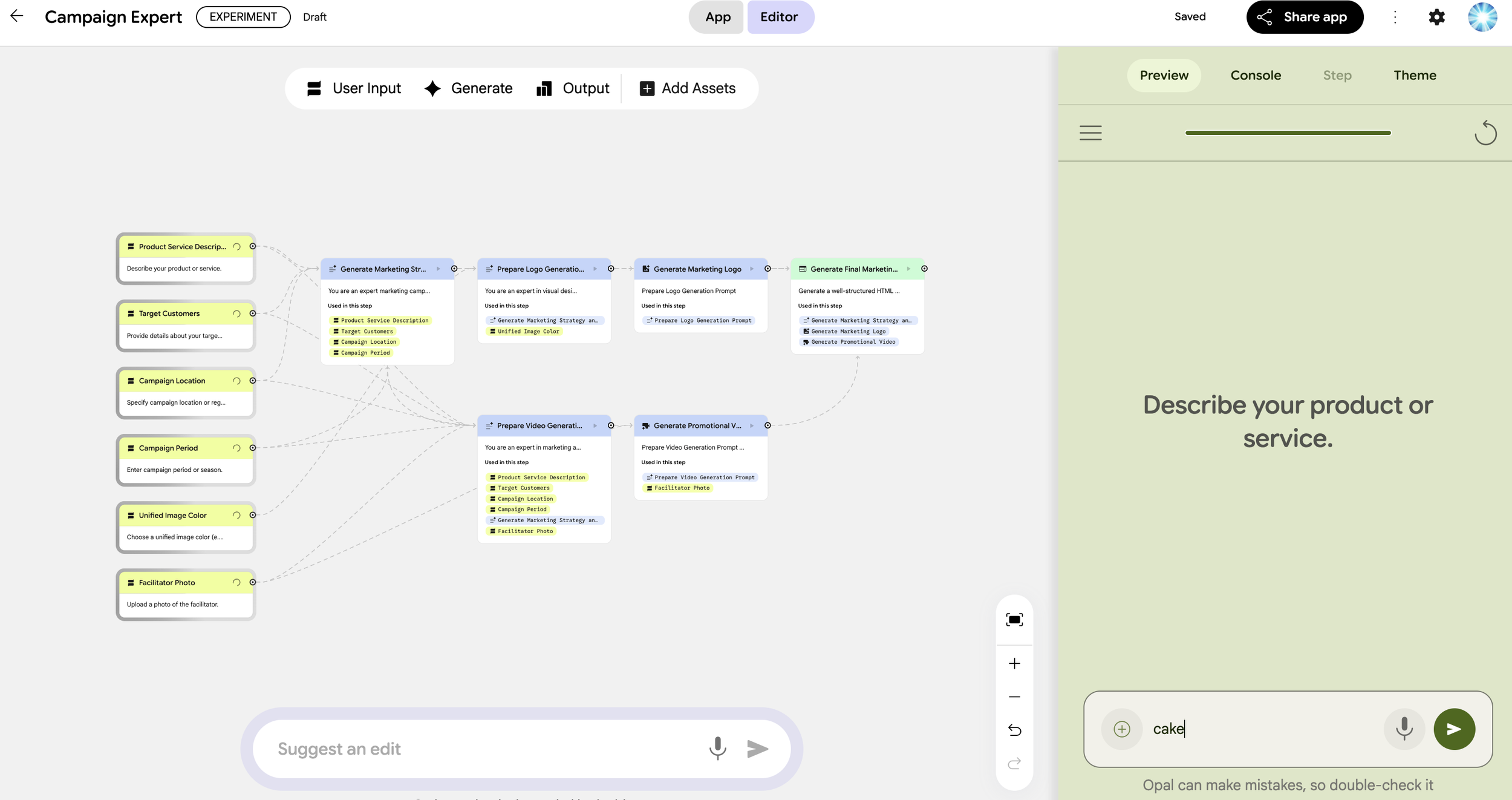

Alongside the progress of these models, the toolsets known as "code assistants" are also likely to significantly improve their capabilities. Tools like Claude Code, Gemini CLI, Cursor, and Codex have become indispensable for programmers today, but in 2026, these code assistants will likely play an active role in fields closer to business, such as machine learning and economic analysis.

At this point, calling them "code assistants" might be off the mark; a broader name like "Thinking Machine for Business" might be more appropriate. The day when those who don't know how to code can master these tools may be close at hand. It is very exciting.

3. AI Agents and Governance

As mentioned above, I predict that in 2026 AI agents will increasingly permeate large organizations such as corporations and governments. However, there is one thing we must be careful about here.

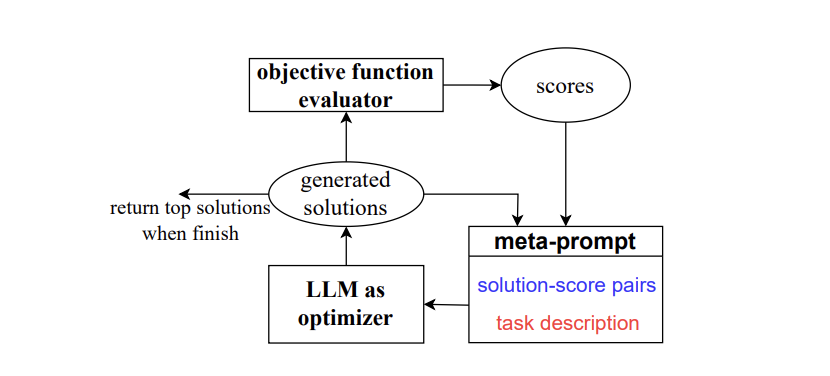

The behavior of AI agents is probabilistic. This means that different outputs can be produced for the same input, which is very different from conventional systems. Furthermore, if an AI agent is capable of Recursive Self-Improvement (updating and improving itself), the agent will change over time and in response to changes in its environment.

In 2026, we must begin discussions on governance: how do we structure organizational processes and achieve our goals using AI agents whose characteristics are unlike those of any previous system? This is a very difficult theme, but I believe it is unavoidable if humanity is to securely capture the benefits of AI agents. I previously established corporate governance structures in the financial industry, and I hope to contribute, even a little, based on that experience.
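The point about probabilistic behavior can be made concrete with a toy sketch. It is not how any particular agent is implemented: the action names, the scores, and the `toy_agent` function are hypothetical, and the only thing shown is the sampling step, where the same input can lead to different outputs unless the choice is made greedily (written here as temperature 0).

```python
import math
import random
from collections import Counter

ACTIONS = ["approve", "escalate", "reject"]  # hypothetical agent actions
SCORES = [2.0, 1.5, 0.5]                     # made-up preference scores


def softmax(scores, temperature):
    """Turn scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def toy_agent(request, rng, temperature=1.0):
    """Return one action for `request`.

    The scores ignore the request text, because this toy only
    demonstrates the sampling step, not a real model.
    """
    if temperature == 0:
        # Greedy choice: always pick the highest-scoring action.
        return ACTIONS[SCORES.index(max(SCORES))]
    probabilities = softmax(SCORES, temperature)
    return rng.choices(ACTIONS, weights=probabilities, k=1)[0]


def run_many(request, n, temperature, seed=0):
    """Call the toy agent n times on the same input and count the outputs."""
    rng = random.Random(seed)
    return Counter(toy_agent(request, rng, temperature) for _ in range(n))


if __name__ == "__main__":
    print("sampled:", run_many("same input", 200, temperature=1.0))
    print("greedy: ", run_many("same input", 200, temperature=0))
```

One possible governance practice, offered here only as a suggestion, is to record the settings (such as the seed and temperature in this toy) together with each output, so that a decision can be examined after the fact.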

What did you think? It looks like AI evolution will accelerate even further in 2026. I hope we can all enjoy it together. I look forward to another great year with you all.

You can enjoy our video news ToshiStats-AI from this link, too!

1) Introducing Nano Banana Pro, Google, November 20, 2025

2) Genie 3: A new frontier for world models, Jack Parker-Holder and Shlomi Fruchter, Google DeepMind, August 5, 2025

Copyright © 2026 Toshifumi Kuga. All rights reserved.

Notice: ToshiStats Co., Ltd. and I do not accept any responsibility or liability for loss or damage occasioned to any person or property through using materials, instructions, methods, algorithms or ideas contained herein, or acting or refraining from acting as a result of such use. ToshiStats Co., Ltd. and I expressly disclaim all implied warranties, including merchantability or fitness for any particular purpose. There will be no duty on ToshiStats Co., Ltd. and me to correct any errors or defects in the codes and the software.