In 2025, I actively incorporated "Vibe Coding" into my machine learning work. Over repeated trials, however, the quality of the generated code was inconsistent: sometimes good, sometimes bad.

Therefore, in this experiment, I use Claude Code "Plan Mode" (1) to have an AI agent automatically generate an implementation plan before any code is generated. Based on this plan, I will test whether a machine learning model can be built reliably with "Vibe Coding." Let's get started!

1. Generating an Implementation Plan with Claude Code "Plan Mode"

Once again, I would like to build a model that predicts in advance whether a customer will default (on a loan, etc.). I will use publicly available credit card default data (2). For the code assistant, I am using Claude Code, and for the IDE, the familiar VS Code.

As input for the Claude Code AI agent, I summarized the task and the key implementation points in a "Product Requirements Document (PRD)." This is the only document I wrote myself.

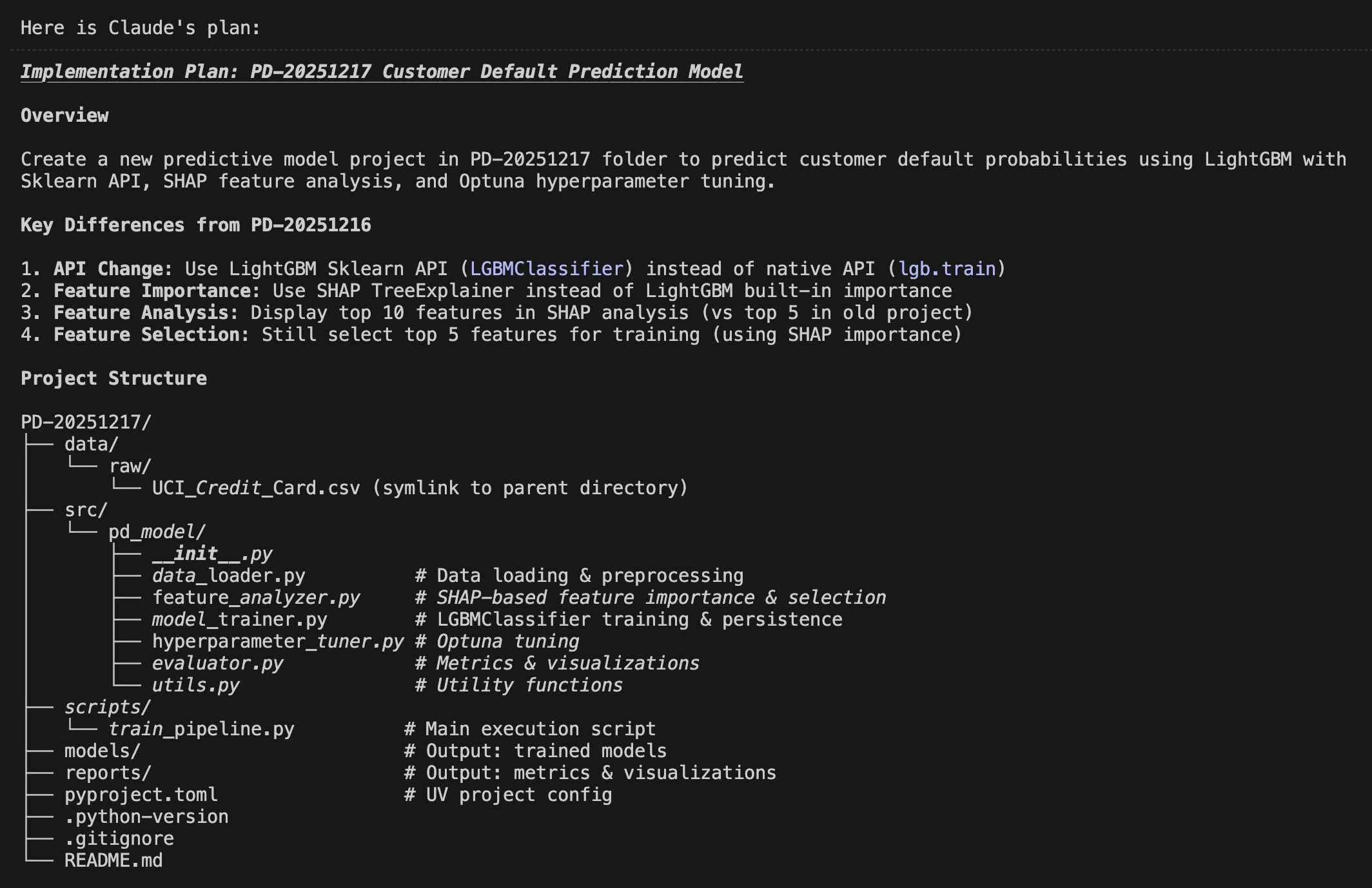

I gave this PRD to Claude Code in "Plan Mode" with the instruction: "Create a plan to create predictive model under the folder of PD-20251217".

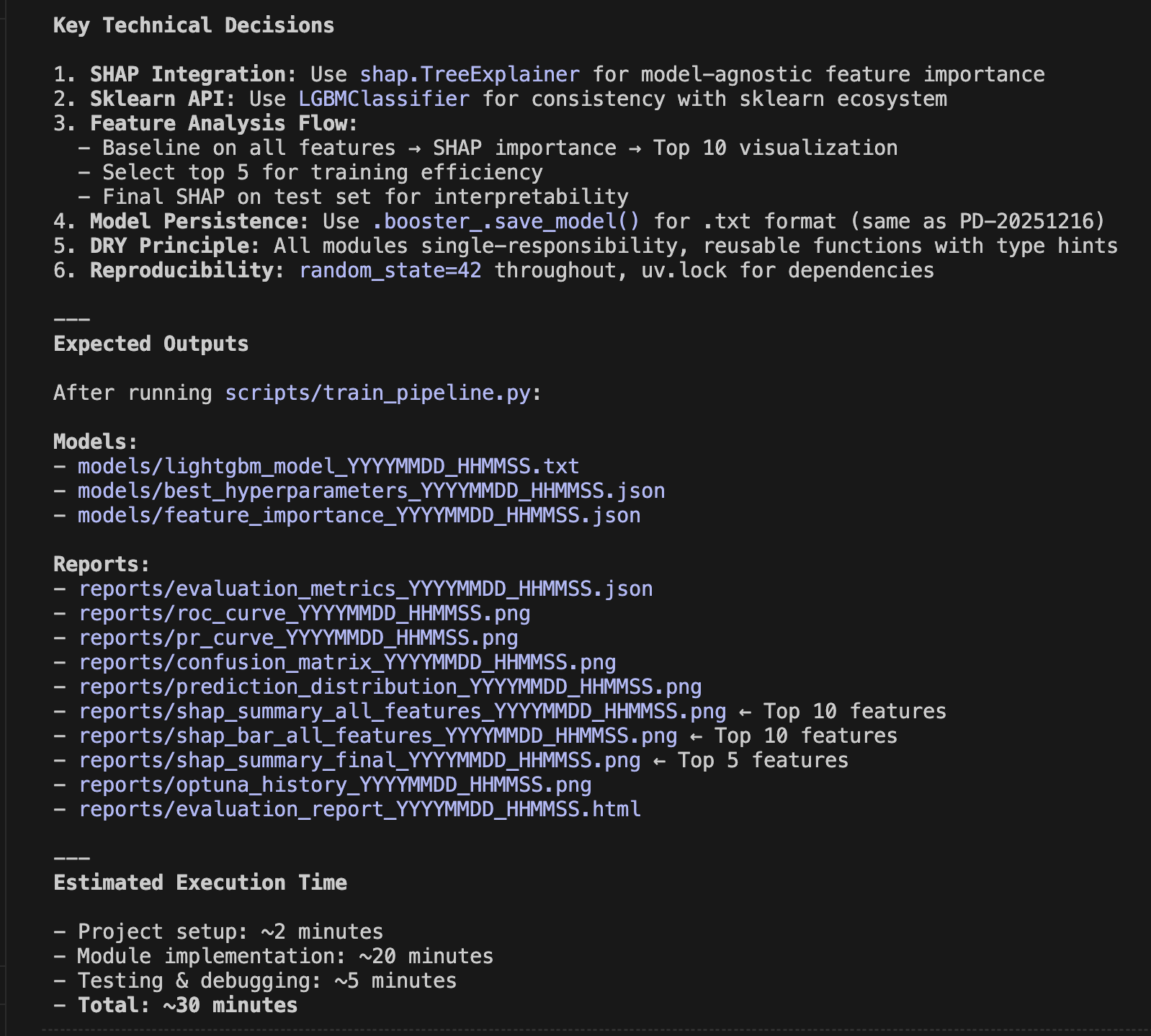

Within minutes, the following implementation plan was generated. Comparing it to the initial PRD, you can see how refined it is. Note that I am showing only half of the generated plan here; the full plan is far more detailed. The AI agent's ability to think this far ahead is amazing.

2. Beautifully Visualizing Prediction Accuracy

Once this implementation plan is approved and executed, the prediction model is built. Naturally, we want to know how accurate the resulting model is.

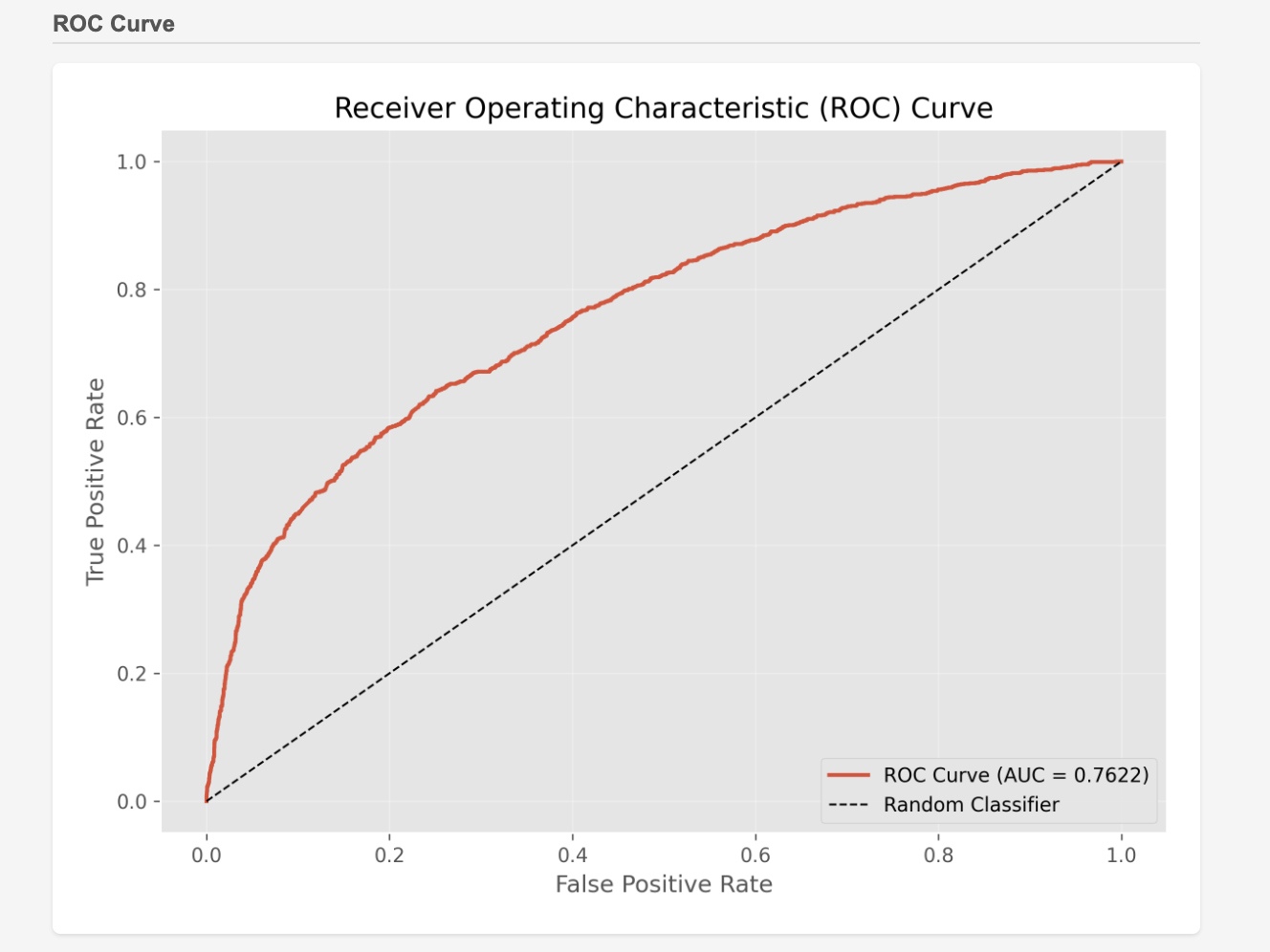

The results are visualized clearly, as specified in the implementation plan. These are familiar metrics for machine learning experts, but all the important ones are covered, presented in an easy-to-understand way, and summarized in a single HTML file that can be viewed in a browser.

The charts below are excerpts from that file. The file includes ROC curves, SHAP values, and even hyperparameter tuning results. This time, the total implementation time was about 10 minutes. If this much can be generated automatically in that time, I would rather leave it to the AI agent.
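For readers less familiar with the ROC curve mentioned above, here is a minimal sketch of how its points and the area under it (AUC) can be computed. This is not the code the AI agent generated. The function names `roc_curve_points` and `auc_trapezoid` are hypothetical, and the labels and scores are made-up toy values, not results from the credit card default data.

```python
from typing import List, Sequence, Tuple


def roc_curve_points(
    labels: Sequence[int], scores: Sequence[float]
) -> List[Tuple[float, float]]:
    """Return (false positive rate, true positive rate) points.

    labels: 1 = default, 0 = no default (toy encoding, an assumption).
    scores: predicted default probability for each customer.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative label")

    # Sort by descending score: lowering the threshold admits rows one group at a time.
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])

    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Rows tied at the same score cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / negatives, tp / positives))
    return points


def auc_trapezoid(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the ROC curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Toy example with made-up values (illustration only).
toy_labels = [1, 0, 1, 0, 0, 1, 0, 0]
toy_scores = [0.9, 0.8, 0.7, 0.4, 0.35, 0.3, 0.2, 0.1]
toy_points = roc_curve_points(toy_labels, toy_scores)
print("ROC points:", toy_points)
print("AUC:", round(auc_trapezoid(toy_points), 4))
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation of defaulters from non-defaulters, which is why this curve is a standard first check for a default prediction model.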

3. Meta-Prompting with Claude Code "Plan Mode"

A meta-prompt is a prompt (an instruction to an AI) that is used to create and control other prompts.

In this case, I called Claude Code "Plan Mode" and instructed it to "generate an implementation plan" based on my PRD. This amounts to executing a meta-prompt in "Plan Mode."

Thanks to the meta-prompt, I didn't have to write a detailed implementation plan myself; I only needed to review the output. This is efficient because the review happens before any code is written. And since the implementation plan can itself be viewed as a highly precise prompt, the accuracy of the actual coding is expected to improve.
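The two-stage flow described above (PRD to plan, human review, plan to code) can be sketched in a few lines. This is only an illustration of the idea, not how Claude Code works internally and not its API. The names `build_plan_prompt`, `build_coding_prompt`, and `run_two_stage` are hypothetical, and `generate` stands in for any function that sends a prompt to a model and returns its text.

```python
from typing import Callable, List, Optional


def build_plan_prompt(prd: str, target_folder: str) -> str:
    """Stage 1 (the meta-prompt): ask for a plan, not for code."""
    return (
        "Read the product requirements document below and write a step-by-step "
        f"implementation plan for code under the folder {target_folder}. "
        "Do not write any code yet.\n\n"
        f"PRD:\n{prd}"
    )


def build_coding_prompt(plan: str) -> str:
    """Stage 2: the approved plan becomes the detailed coding prompt."""
    return f"Implement the following approved plan.\n\nPLAN:\n{plan}"


def run_two_stage(
    prd: str,
    target_folder: str,
    generate: Callable[[str], str],  # hypothetical stand-in for a model call
    approve: Callable[[str], bool],  # the human review step
) -> Optional[str]:
    """Generate a plan, let a reviewer approve it, then generate code from it."""
    plan = generate(build_plan_prompt(prd, target_folder))
    if not approve(plan):
        return None  # rejected plans never reach the coding stage
    return generate(build_coding_prompt(plan))


# Usage with a stub instead of a real model (illustration only).
sent_prompts: List[str] = []


def fake_generate(prompt: str) -> str:
    sent_prompts.append(prompt)
    return f"response {len(sent_prompts)}"


result = run_two_stage(
    "Predict credit card default.", "PD-20251217", fake_generate, lambda plan: True
)
print(result)
print(sent_prompts[1])
```

The point of the design is that the reviewer sees the plan before any code exists, and the second prompt contains the full plan rather than the short PRD.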

To be honest, I don't have the confidence to write the entire implementation plan myself. I definitely want to leave it to the AI agent. It has truly become convenient!

What did you think? Generating implementation plans with Claude Code "Plan Mode" seems applicable not only to machine learning but also to many other fields and tasks. I definitely intend to keep experimenting with it. I encourage everyone to give it a try as well.

That’s all for today. Stay tuned!

You can enjoy our video news ToshiStats-AI from this link, too!

1) How to use Plan Mode, Anthropic

2) Default of Credit Card Clients

Copyright © 2025 Toshifumi Kuga. All rights reserved

Notice: ToshiStats Co., Ltd. and I do not accept any responsibility or liability for loss or damage occasioned to any person or property through using materials, instructions, methods, algorithms or ideas contained herein, or acting or refraining from acting as a result of such use. ToshiStats Co., Ltd. and I expressly disclaim all implied warranties, including merchantability or fitness for any particular purpose. There will be no duty on ToshiStats Co., Ltd. and me to correct any errors or defects in the codes and the software.